eXtensions - Thursday 20 November 2025

By Graham K. Rogers

Education should not be the mechanical learning of subjects, but training of the mind to think. - Nikola Tesla

Although machine learning has existed for a number of years, the recent, wider availability of artificial intelligence (AI) chatbots such as ChatGPT has seen a greater output of AI content, some of which I find problematic. My main area of work is in academic writing (and writing in general). I accept that there are ways in which AI is useful. I am deeply disturbed by the AI output I have seen, and equally worried by the senior academics who believe this use of technology is the right way forward with writing.

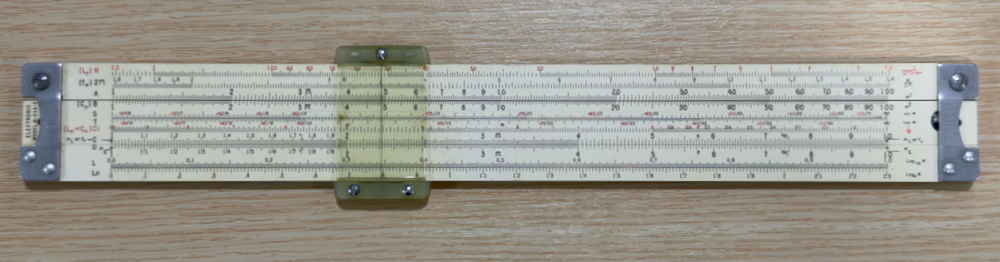

He continues that, with the complete move from the slide rule to the calculator in the 1970s, Engineering faculty members "did not ask whether students would lose their feel for the decimal point if the calculator handled it all the time", and later notes that with the assimilation of the calculator and the computer, "Some structural failures have been attributed to the use and misuse of the computer. . . ." With the advance in technology that made complex calculations easier, something was lost.

In the past, I have helped students who were examining the use of AI as a way to detect problems on X-rays that might not otherwise be found by non-specialist doctors in smaller rural hospitals. Two projects I saw looked at X-rays of Covid patients, and from patients who might have cancer of the lung. With a suitable training process false positives could be excluded and (as important) X-rays from other patients could be flagged for further examination.

Another area of some value is the examination of large amounts of data, for example in astronomy. Images from the newer, high-quality telescopes can be examined more thoroughly, and far more quickly, identifying areas worthy of further examination. Sorting through data - and at speeds impossible for humans - is a strength of AI systems. This can help researchers draw conclusions more easily with the datasets they are working on. Another example of the way AI can handle massive datasets is in helping to understand and predict earthquakes. Recently, an AI chatbot helped a family in the USA reduce a medical bill of $195,000 to $33,000: Mark Tyson (Tom's Hardware) [2].

There are, of course, several potential applications in engineering. The Neural Concept [3] site outlines five: 3D printing of structures; digital twins, to help predict system behaviors; automation and robots; autonomous systems, for dangerous or repetitive tasks.

Although scientists and engineers have been using AI in various ways for several years, recent expansion seems to have been pushed as much by financial markets and investor excitement as by the technology itself. The value of companies developing the technology (and the chips needed) has increased by several billion dollars (NVidia hit the first corporate valuation of $5 trillion at the end of October). The booming technical markets are already causing some to be concerned about a potential bubble (NBC News [4]): the AI edifice is built on sand. Everybody is chasing this elusive will-o'-the-wisp, but (to mix metaphors) like Alice they are having to run faster to stay in the same spot.

I am far from convinced about the value of AI in academic writing by what I have seen so far. Following the excitement and publicity generated by financial commentators, many in academia have rushed to embrace this new slant on technology as if it is the answer to all problems. This applies particularly with regard to academic writing in the second language environment I work in. There are three components to a graduate degree: learning skills and acquiring knowledge; conducting research to prove a hypothesis; writing the thesis. In my opinion writing the thesis is as much a part of the degree as the research element.

Writing in Nature, Helen Pearson [5] outlines a number of these concerns. A search will reveal several more such articles. Some are worried that "students are using AI to short-cut their way through assignments and tests, and some research hints that offloading mental work in this way can stifle independent, critical thought." Some academics are angry that universities are allowing the use of these tools. In a small study it was found that "Students using only their brains showed the strongest and widest pattern of connectivity and were usually able to later recall what they'd written. The LLM-using group showed the weakest connectivity [. . .] and could sometimes barely remember a word of their prose."

Most of the undergraduate students I come into contact with are Thai. Many of my Thai colleagues have completed graduate degrees at universities in other countries (Australia, Austria, UK, USA, et al) and have stronger English. Graduate students come mainly from Thailand and other parts of South-east Asia; from South Asia and Nepal; as well as other countries (Afghanistan, Iran, Turkey, USA). Those from South Asia and Nepal have specific problems related to the colonial influence on education (the system in Nepal was developed by an Indian). Their English output has a particular style, with two (or more) subjects, followed by two objects. In The Reader over your Shoulder (2017 version), Robert Graves [6] (writing in 1943) identifies this style as "Doublets" which were commonly used in religious texts in England. He adds that Doublets suggest legal phraseology and so give an air of authority to a sentence. I speculate that this style was introduced to South Asia in the colonial era and is still taught there. A 1936 text book (High School English Grammar & Composition by Wren & Martin) [7] published in India was recognized by a student from Pakistan as the same work he had used when at school.

In the past, written English output here was usually direct translation. Much still is, and I am able to recognize these problems: word-choice, word order, grammar, verb endings, specific constructions et al. Universities and some academic publishers now accept AI output, with the proviso that the writer shows that AI has been used and AI is not the main author. This is a smoke screen. Work is being produced that reeks of AI to the detriment of the organizations and writers involved. By delegating the writing task to AI, the hard work involved in learning how to produce effective content is no longer taking place.

Learning the basics creates a foundation on which to build. I take better digital photographs because I know how to use film. There were no digital cameras when I was a child, and I still use film now. Writing was an everyday task at my Junior and Grammar schools, but my professional writing apprenticeship began when I was a policeman in the 1970s. I was initially shown how to write simple reports and produce evidence for the courts from them. The difficulty increased as I dealt with more complex problems (multi-vehicle accidents, burglaries, crime sprees). Later, I needed to develop more complex (and high quality) files for the office of the Director of Public Prosecutions. And then I went off to university.

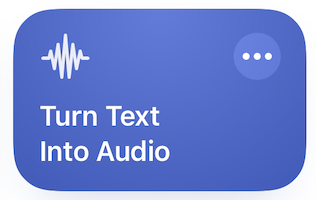

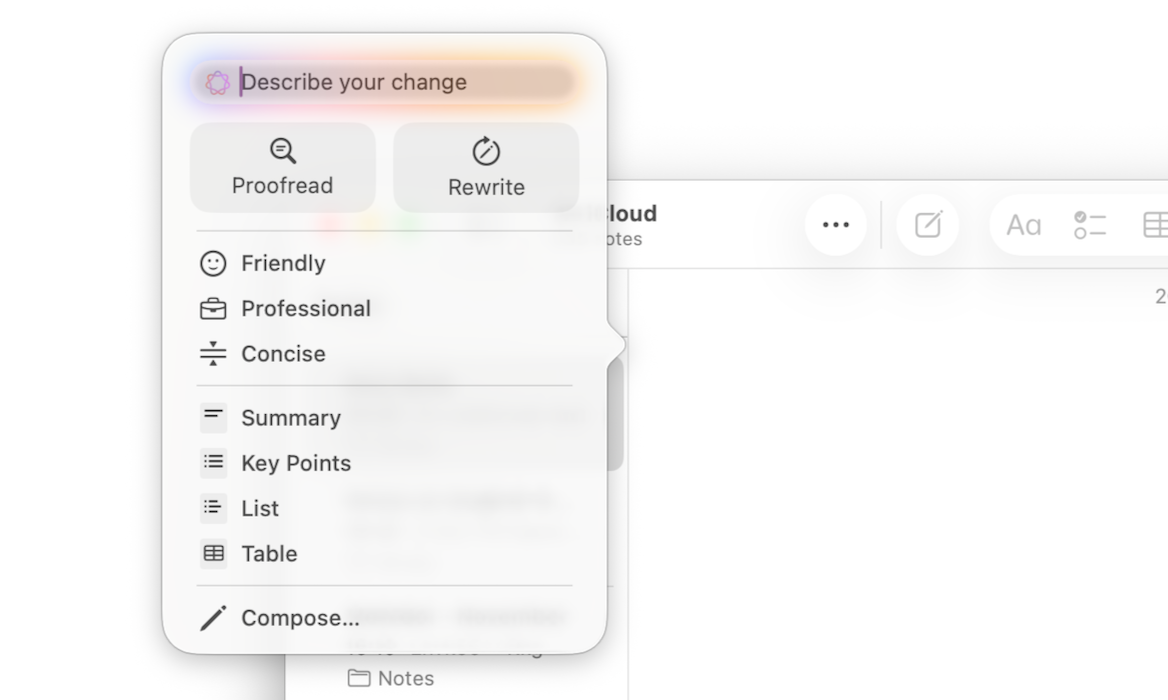

I added to the speak feature on the Mac a few years ago when I had to make a conference presentation in Kuala Lumpur with another Services option: add to Music as a Spoken Track. The file was exported to iTunes and synchronized to my iPod. I was able to listen to the full presentation several times before the event. I have added to this with a Shortcut that I have on all my devices that turns text into speech, although this needs some copy & paste work first. Dr. Drang is correct here. Listening to a text is an effective aid to proofreading. I found this when I produced a weekly podcast some years ago. As I read the text out, errors that I had not noticed before became apparent and could be fixed.

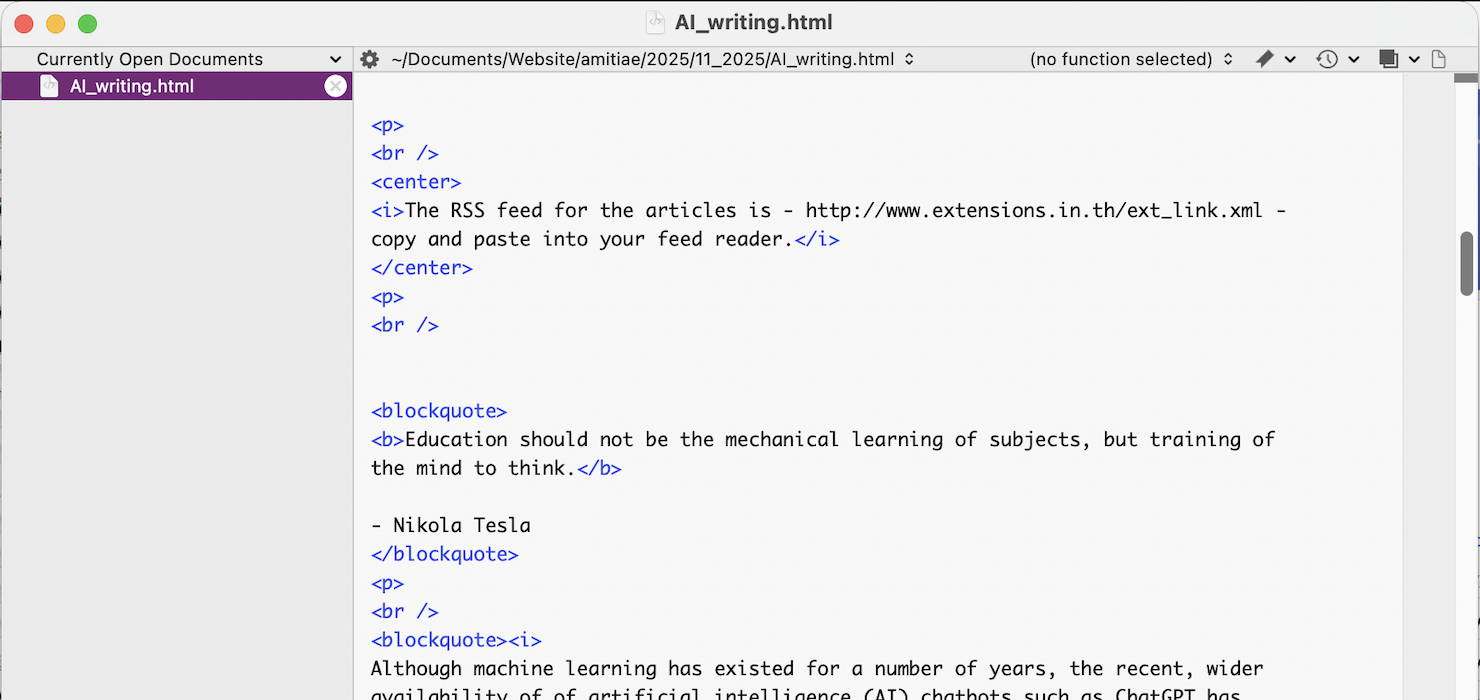

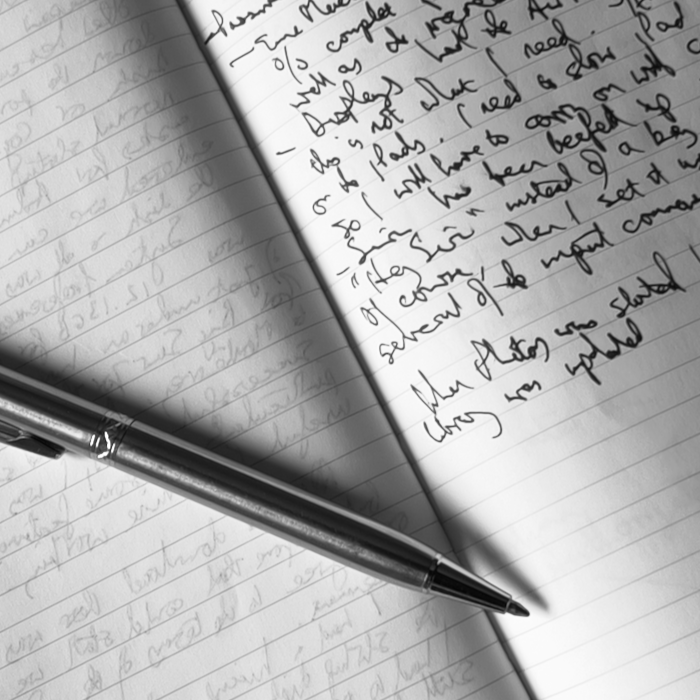

I also find that a change in medium helps. I am writing this in a notebook. It helps me to think and I can develop ideas as I am writing. The handwritten content is typed into a text editor: ASCII text; no formatting (BBEdit). Many writers make what I think is an error by starting in Word or a similar application. Formatting can be done at a later stage. The process should begin with the collection and control of information. Notes are fine, perhaps some proto-sentences; but worrying about grammar at this early stage bogs the writer down. I write paragraphs in my head while I am walking along, so some of my content is almost complete. That is less likely for many of those I advise here as their ideas are developed in their native language. Further work on the content - word choice, word order, grammar, direct translation - will be done as the work evolves. The first drafts are not expected to be perfect.

Writing of a first draft does not necessarily happen in one go. Initially it does not have to be complete. A literature review can be built piece by piece, the Results can be written before the Method (although the writer should keep notes of the steps taken). The Abstract is read first, but should be written last. The first written draft should be examined for the right balance of content (ideas not needed; other ideas to be added). There is little point in working on grammar if some parts are to be changed or deleted. At this stage, I type into a text editor and make several changes to content while typing, sometimes adding new ideas.

With that second draft in ASCII text (no formatting) I make several checks. Content is altered in a number of ways: writing is not concrete. Depending on the final use of the text, I will either use HTML markup for the web, or paste it into a word processor to produce formatted content. I will either check this almost-complete work in a browser or in the word processing application. From the notebook, to the text editor then formatted, I have used three media to view the text. Each change allows me to look at the writing in a different way. The eyes do not see the same thing each time.

A second pair of eyes will also see problems the writer has missed, which is one of the ways I help students and academics. For my own writing, however, I may not have that luxury. Instead I use Time. If the content is a web article and I have set myself a deadline, I will walk away from the computer for at least an hour (say, over lunch). On my return I may spot something I had missed before. A day is better; or more.

I am able to adapt this when teaching certain undergraduate classes. When writing project proposals, I instruct students from the Electrical Engineering Department to produce their first handwritten draft with sentences no longer than 10 words. They must think carefully about the English grammar rather than copying a direct translation, and it is far easier for me to spot any attempt at plagiarism.

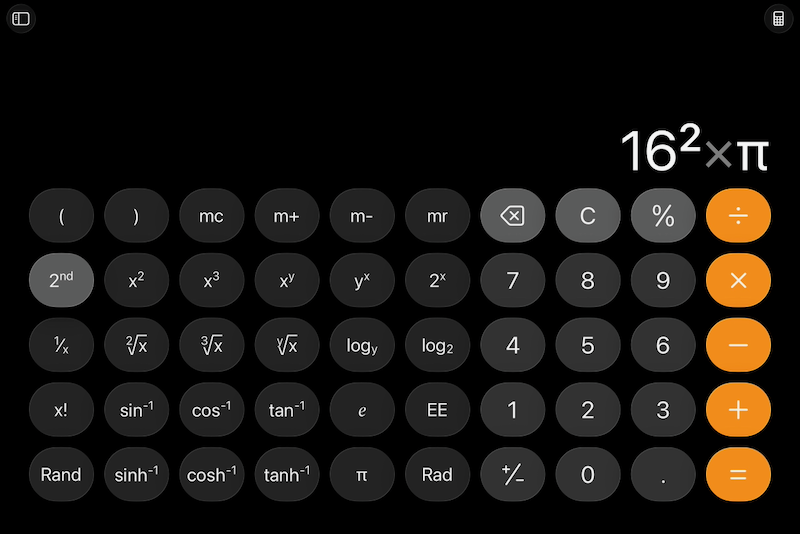

The Dean of the Faculty of Engineering asked me if I had ever used AI. My answer at that time was negative, but a short time later, a further Apple update to iPadOS provided access to ChatGPT so I tried. I asked ChatGPT to write a paragraph on problems with AI. A skilled writer might skew the content a little, but AI is so guileless that it was able to produce a few sentences with a reasonable argument. This was the result:

One of the significant disadvantages of writing with Artificial Intelligence is the potential erosion of creativity and critical thinking skills. When students or writers rely too heavily on AI-generated content, they may miss the opportunity to engage deeply with their subject matter, leading to a superficial understanding of complex topics. AI tools often provide ready-made responses that lack the nuance and depth that come from human thought processes. This can result in writing that is formulaic and lacks originality. Additionally, over-reliance on AI can diminish the writer's personal voice and style, making all outputs seem homogenous and less engaging. Consequently, while AI can aid in efficiency, it poses a risk to the development of essential skills in analysis, synthesis, and personal expression within academic and creative writing.

But it was not me. I have marked (in italics) some words that I would not use. There are also too many conjunctions ("and"); also the use of multiple subjects and objects:

This is where I am able to see problems with AI output that many non-native speakers of English cannot. They accept the output at face value and without checking. That was illustrated more recently when a Thai colleague came to me with her own variation of the same problem. She had been asked to write a letter of recommendation for a student who wanted to attend a university in Taiwan. Using an AI source, she had produced an acceptable text, but felt the letter was not hers, although (as she explained) she was unable to put her finger on just why it felt wrong.

I wrote on this in an online comment (10 Oct 2025) [9]. I was able to identify two problems in the first four words: a grammar point and an unctuous adjective. As I went through, I explained some of the finer points of the constructions, and (especially) the words used, some of which were not quite right: AI malapropism. Thai writers of English use a lot of adverbs when they translate into English. It appears that ChatGPT also has this characteristic. I advised, Cut, cut, cut, starting with adverbs and nouns.

I am usually asked to look at work that is already complete. Sometimes the content has been returned by a journal with the recommendation that the writer take advice from a native speaker of English. That used to mean the grammar and word choice needed input, but nowadays I find that the output I am asked to check has been created by AI, or has been put through an AI grammar checking process. As above, the writer is unable to see any problem with the content, but I am immediately aware that the writing is unreal. There is a certain shallowness to the word salad on the page that years of reading high-quality input, and of writing, can identify.

I had been attuned to the translation-generated output that was the norm here, and could easily identify the occasional plagiarism: sometimes just a forgotten reference. A couple of years ago I began to notice odd word choices: particularly the word, "delve" creeping into articles and thesis work. With the particular styles of writers from South-east Asia and South Asia, it is easy to see when a text has input from elsewhere. I am usually able to find the original source from an online search. However, at about the same time that Delve was beginning to appear, I found some content that was clearly not written by the students, but could not be proved to be plagiarized text. Fortunately the students were honest when asked about this and admitted to using an online paraphrasing tool.

My position is that a degree is not simply a piece of paper but a learning experience, and that part of a graduate degree includes not just the research but the writing of the thesis. Plugging in artificially generated content defeats the object of that part of the experience and those who use AI sources lose something from the creative part of the experience. As illustration, Myke Bartlett (Guardian) [10] writing about his preteen children creating a song using ChatGPT, told them, "You've missed the fun part". When discussing creativity in his look at how AI is being used more and more, he adds that "the process is the point". That development and growth is also missing in the writing if the AI just churns it out, even without considering the bland nature of the output.

I mention above the word salads that seem to be a hallmark of AI-generated content. Another problem I have noticed is that the so-called grammar checkers that some students use also tamper with the original word choice. When editing I may suggest a better alternative (crucial, critical, essential, urgent, significant, important, necessary) or insist on a change when the wrong word is used (e.g. converse/conserve; exacerbate/exasperate), but like a sub-editor at a newspaper here who I had the misfortune to come across, the software may sometimes just change a word for no reason, even when the writer was correct: diluting the authorship.

It defeats the object of editing if after an expert has examined the text and made suggestions, the original writer puts the content through the AI check again. I saw this more than once on a paper I checked recently that had been returned with a comment that it should be examined by a native speaker. Each time I noted the same problems.

This was also illustrated while I was writing this article, when I was asked to look at the English in AI-generated content about a student project that won an award in Taiwan recently. I was troubled by the information that the group had won a "Certificate of Earthquake-Proved Award." Something at the back of my mind told me to look again. When I had checked the rest of the passage - so many unnecessary words - I realized that the correct term was "earthquake-proof": implying protection (like fireproof). The non-native speakers who had prepared the text, probably from a translation (itself a potential source of problems), would not have been able to identify the error in the content produced from the AI output. That the process where I work includes a native speaker (me) to check the English output guards against such problems, but other output may not be checked so carefully.

That point about nagging doubt has appeared several times while I am editing; nothing I can put my finger on immediately, but something is not right. In contrast, the AI has total self-confidence. In one (non-AI) paper I was unhappy with the frequent use of the term, "mind problems". In the context of urban development related to public transport, this was perhaps possible, but I had a nagging doubt. The error was neither AI nor translation but pronunciation. In going from Thai to English, the character English speakers pronounce as L can be changed to N. The university where I work is named, Mahidol, but this is pronounced Mahidon. The correct wording was that there were mild problems. AI might not recognize that difference.

I try to convince my students and colleagues to stay away from AI as if it were a swarm of bees. To be scrupulously honest, I remember this phrase from an old Apple Support document (no longer available), dating from the time of OS X, Tiger: "As if it were a swarm of bees, you should stay away from the SyncServices folder." It was unusual coming from Apple, but it caught the reader's attention right away and conveyed the risks perfectly.

One of the more valuable courses that I studied as a graduate at Illinois State University was Stylistics, taught by the late Maurice Scharton. I have found this particularly useful in Thailand, where I often examine student output. Thais, and other Southeast Asian natives, write English in a particular way and the translated output is easy to identify. Those from South Asia do not translate, but write English in a way that is also easy to recognize. This probably comes from the teaching in colonial times. With its multiple subjects and objects, and frequently a complex vocabulary choice, output can be compared to the British ecclesiastical and legal writing of the 19th century (Graves) [6]: designed to impress. This is easy to spot.

When a writer switches from the SE Asian translation or the South Asian doublet approach to sentences with the style of a textbook, journal or news source, further investigation is required if the writer has failed to provide a reference. It may be that the writer has omitted this in error. Some students, particularly undergraduates, will deny that there is plagiarism, even when the original source has been presented to them. AI makes this far less easy to police. It may be clear to me that the style has changed mid-paragraph, but I will not be able to track down the source before confronting a student. In either case, it needs a careful approach when discussing such problems with the writers, particularly when some authorities are willing to accept AI output, as long as the writer confirms that AI was used. In my opinion, this fails to cover the question of source, when the content is artificially produced and the writer has had little control over the text.

I tend to be somewhat forgiving of undergraduate students in Thailand when I first spot problem content that is possibly plagiarized. In high schools here, this is how they are taught to produce reports. When I first came to Thailand, it was common to spot students Xeroxing books or newspapers, then pasting that content onto pages that they would staple together and submit with a nicely-designed cover. The internet changed that. Instead of Xerox, the students can copy the content they need directly from the computer screen and use the Paste command to add it to the report.

The use of AI is only slightly different and produces what looks like good English text, without the need for composition and translation, which would inevitably produce less than perfect content. The act of translation uses words or phrases from the native language and puts them into the target language without the necessary task of making the content true with regard to that target language. Anyone who has read the works of Virgil, Camus, Goethe, Dostoevsky or others, in English, is also relying on the skills of an expert translator. I can confirm that the English versions of the Aeneid or La Peste are not identical to the originals in their respective Latin or French. Thai writers of English leave a number of clues in the text that reveal the use of translation. These include wrong prepositions, verb tense problems, word choice and word order, and the excess use of adverbs, especially Moreover and Furthermore.

Text that has been produced by AI or an AI grammar checker also has markers that indicate the potential source. I was alerted to these some 2 years ago when I began to see the use of "delve" in some graduate papers. I am aware of its meaning and have seen it used a number of times, particularly in conjunction with the verb, dig. A common nursery rhyme, which I would have heard first in the 1950s, begins, "One, two, buckle my shoe", continuing through other numbers to "Eleven, twelve, dig and delve", and beyond. Before these papers began to appear, I had not seen it used here.

AI output has a particular preference for certain words, especially "critical" and "crucial" although these are stronger than may be needed. There are subtle differences in meaning between crucial, critical, essential, urgent, significant, important, and necessary. In a similar vein, AI grammar checking software has an annoying tendency not only to identify grammar problems, but to arbitrarily change a writer's original word choice for a synonym, even if the original word was correct (e.g. important to critical). There are a number of sources that discuss the use of words that may indicate an AI source, including an accessible article by Margaret Ephron (Medium) [11].

As well as specific words that suggest AI has been used, the content produced is often too long. It has too many words. I used the term, "Word salad" to describe this (above). I explain to students that I would not write it in this way. The students, however, with their limited experience of content are unable to identify that the text could be improved, particularly by removal of several unnecessary words. Writers are also unable to identify wrongly-used words (Earthquake-Proved/Earthquake-Proof). The content is not wrong, but it could be considerably improved.

The problem of fake citations generated by AI is examined by Ayyoob Sharifi (Nature) [12], and more recently, Emily Hung (SCMP) [13]. The Los Alamos National Laboratory [14] carries an article that warns writers to be wary of using "legitimate looking reference citations".

Similar problems also exist in the Law, with several examples of false information provided by AI including Josh Taylor (Guardian) [15]; Joe Wilkins (Futurism) [16]; Samantha Cole (404 Media) [17]; et al.

From the cases I have seen when the references appear to be false or non-existent, most are in articles written by graduate students, who are perhaps (at best) too trusting and more likely to trust the AI rather than verify. The responsibility for content is theirs of course.

That students, and the academics who are paid to advise them, are willing to accept this indicates an abdication of responsibility. The approach by many students who are using AI should not be a case of Trust but Verify (doveryai, no proveryai): Akash Wasil (CIGI) [18]. With the number of errors - factual and grammatical - the approach should be, Do not trust. Verify.

The use of AI in academic writing is a short cut to disaster

Although links to articles (when available) are given in the text, I thought it advisable to provide an academic list of works cited.

[2] Tyson, M. "Grieving family uses AI chatbot to cut hospital bill from $195,000 to $33,000 - family says Claude highlighted duplicative charges, improper coding, and other violations". 29 October 2025. Tom's Hardware. https://www.tomshardware.com/tech-industry/artificial-intelligence/grieving-family-uses-ai-chatbot-to-cut-hospital-bill-from-usd195-000-to-usd33-000-family-says-claude-highlighted-duplicative-charges-improper-coding-and-other-violations. Accessed 20 November 2025

[3] "The Top Applications of AI in Engineering You Should Know". 2025. The Neural Concept. https://www.neuralconcept.com/post/the-top-applications-of-ai-in-engineering-you-should-know Accessed 13 November 2025

[4] Jackson, H. "Booming stock market led by tech has some saying it feels like the 1999 internet bubble". 14 October 2025. NBC News. https://www.nbcnews.com/video/booming-stock-market-led-by-tech-has-some-saying-it-feels-like-the-1999-internet-bubble-249808965666. Accessed 13 November 2025.

[5] Pearson, H. "Universities are embracing AI: will students get smarter or stop thinking?" 21 October 2025. Nature. https://www.nature.com/articles/d41586-025-03340-w. Accessed 13 November 2025.

[6] Graves, R. and Hodge, A. The Reader over your Shoulder. Seven Stories Press; New York, 2017.

[7] Wren, P.C. and Martin, H. High School English Grammar & Composition. Blackie, Kindle ed. 1995.

[8] Dr. Drang. "Proofreading with ChatGPT" 12 October 2025. All This. https://leancrew.com/all-this/2025/10/proofreading-with-chatgpt/ Accessed 12 October 2025.

[9] Rogers, G.K. In "Friday Notes: Underdogs; Expensive Risks from Cheap Batteries; Apple Watch; AI Wariness" 10 October 2025. eXtensions https://www.extensions.in.th/amitiae/2025/10_2025/cassandra_10_10_1.html Accessed 10 October 2025

[10] Bartlett, M. "When my kids wrote a song using AI, all I could think was: you missed the fun part". 20 October 2025. The Guardian. https://www.theguardian.com/commentisfree/2025/oct/21/kids-wrote-song-using-ai-artificial-intelligence-missed-fun-part-opinion. Accessed 20 October 2025.

[11] Ephron, M. "Words and Phrases that Make it Obvious You Used ChatGPT". 21 May 2024. Medium. https://medium.com/learning-data/words-and-phrases-that-make-it-obvious-you-used-chatgpt-2ba374033ac6. Accessed 11 November 2025.

[12] Sharifi, A. "Tackle fake citations generated by AI" 5 August 2025. Nature. https://www.nature.com/articles/d41586-025-02482-1. Accessed 20 November 2025

[13] Hung, E. "Journal defends work with fake AI citations after Hong Kong university launches probe" 11 November 2025. SCMP. https://www.scmp.com/news/hong-kong/education/article/3332120/non-existent-ai-generated-references-paper-spark-university-hong-kong-probe Accessed 20 November 2025.

[14] "Don't get ghosted: Beware of ChatGPT generated citations" Los Alamos National Laboratory. https://researchlibrary.lanl.gov/posts/beware-of-chat-gpt-generated-citations/ Accessed 11 November 2025.

[15] Taylor, J. "Lawyer caught using AI-generated false citations in court case penalised in Australian first." 3 September 2025. The Guardian https://www.theguardian.com/law/2025/sep/03/lawyer-caught-using-ai-generated-false-citations-in-court-case-penalised-in-australian-first Accessed 20 November 2025.

[16] Wilkins, J. "Judge Blasts Lawyer Caught Using ChatGPT in Divorce Court, Orders Him to Take Remedial Law Classes" 8 November 2025. Futurism. https://futurism.com/artificial-intelligence/judge-lawyer-divorce-chatgpt Accessed 20 November 2025.

[17] Cole, S. "Lawyer Caught Using AI While Explaining to Court Why He Used AI". 14 October 2025. 404 Media. https://www.404media.co/lawyer-using-ai-fake-citations/?ref=daily-stories-newsletter. Accessed 15 October 2025.

[18] Wasil, A. "'Trust, but Verify': How Reagan's Maxim Can Inform International AI Governance". 11 September 2024. CIGI. https://www.cigionline.org/articles/trust-but-verify-how-reagans-maxim-can-inform-international-ai-governance/ Accessed 14 November 2025.

Graham K. Rogers teaches at the Faculty of Engineering, Mahidol University in Thailand. He wrote in the Bangkok Post, Database supplement on IT subjects. For the last seven years of Database he wrote a column on Apple and Macs. After 3 years writing a column in the Life supplement, he is now no longer associated with the Bangkok Post. He can be followed on X (@extensions_th). The RSS feed for the articles is http://www.extensions.in.th/ext_link.xml - copy and paste into your feed reader. No AI was used in writing this item.
