
Graham K. Rogers

Using the internet in those days, even for simple mail, meant using Unix commands. As a consequence, and having also started my computing life with MS-DOS 2, I have a lot more command-line experience than my students, despite being a Mac user. I even teach this stuff here. Mind you, Unix was, for me, one of the joys of the move to OS X: the idea that the operating system itself was accessible and that things could be changed underneath. A simple example of this came today when I needed to check on an IP number that might be related to someone's problems with Leopard. I put the details into Lookup in Network Utility and sat there waiting. I also tried its Whois and even Traceroute. Not a lot was happening, so I fired up Terminal, typed whois 124.x.x.x, and a reply came back a few seconds later: not known in the ARIN database. Well, probably not, as this is the Asia-Pacific area. So, to find out more, I used the man command with whois, which showed me that I needed to fine-tune my search with the "-A" switch (which sends the query to APNIC, the Asia-Pacific registry), and so whois -A 124.x.x.x got me the result I wanted. All this, of course, took a couple of seconds, much less time than it takes to read (and certainly much less than it takes to write).
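Under the hood, whois speaks a very small protocol (RFC 3912): open a TCP connection to port 43 on a registry's server, send the query followed by a carriage return and line feed, then read until the server hangs up. The -A switch simply points that query at APNIC's server instead of ARIN's. Here is a minimal sketch in Python, assuming whois.apnic.net as the server; the function name whois_query is my own invention, not part of any tool.

```python
import socket


def whois_query(query: str, server: str = "whois.apnic.net", port: int = 43,
                timeout: float = 10.0) -> str:
    """Send one WHOIS query (RFC 3912) and return the server's full reply.

    The protocol: connect to TCP port 43, send the query plus CRLF, then
    read until the server closes the connection.
    """
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall(query.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # empty read: the server has closed the connection
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Given the full address (elided above as 124.x.x.x), it should return much the same text that whois -A prints, although registries may reformat or rate-limit their replies.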

There are times when we need to work offline -- I have one of these right now owing to the snail-like speeds in my office (I go home to work properly). Or, when developing a website, we might want to download the entire contents to check its reliability. Unix does have tools for this, as it does for many of the system-related tasks we carry out, but I had not had much success using the "curl" command or (once I had installed it) "wget". For example, if you simply type the curl command plus a URL, the file is echoed to the Terminal screen, and copy and paste are then brought into play. All a bit untidy if you are not wholly familiar with such commands. Wget is now available as a Universal Binary for OS X via the VersionTracker site. It works easily enough (basically, much like curl), but most computer users these days are just not going to bother with the command line and its complexities, despite its accuracy and speed when you get it right.
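For the record, curl will write to a file rather than the screen if given -O (keep the remote file name) or -o followed by a name, and wget saves to a file by default. The same idea as a minimal Python sketch: the download function is hypothetical, and naming a bare directory URL index.html follows wget's habit, which is an assumption here rather than a rule.

```python
import os
import shutil
import urllib.request
from urllib.parse import urlparse


def download(url: str, dest_dir: str = ".") -> str:
    """Save the resource at `url` into `dest_dir` and return the local path.

    Instead of echoing the body to the terminal (what a bare `curl URL`
    does), the bytes are streamed into a file named after the last path
    segment; a URL ending in "/" is saved as index.html.
    """
    name = os.path.basename(urlparse(url).path) or "index.html"
    path = os.path.join(dest_dir, name)
    with urllib.request.urlopen(url, timeout=30) as resp, open(path, "wb") as out:
        shutil.copyfileobj(resp, out)
    return path
```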

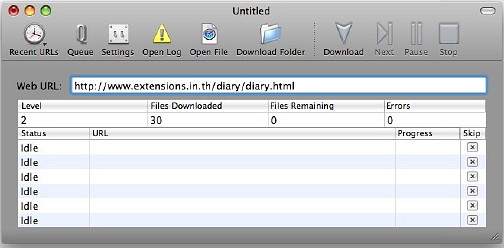

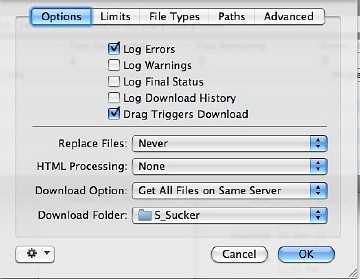

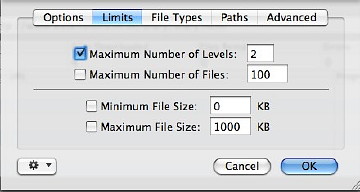

SiteSucker is a fairly straightforward utility that -- a favourite term of mine -- does one thing and does it well, once you conquer a few idiosyncrasies, that is. It is not simply a matter of specifying a site and/or directory, then pressing go. The chances are that, unless you are at the top level or the web developer is a purist, the default filename (index.html) will not be used and SiteSucker will return an error. It is also unwise to leave it to do its job without some constraints. The very nature of hypertext is that it links to other pages and even to other sites. Let it roam and you will begin to notice the downloads filling up with information that the first site had linked to. While I was testing the software on Bangkok Diary pages, I had a vision that sooner or later it would link to Apple and would then attempt to download the entire website onto my 120 GB hard disk. My first attempts, then, made without reading any instructions (who does? honestly?), gave me several errors. When I pointed it at the specific diary.html file, which links to all the pages within the "diary" directory structure, it fetched those pages but then kept going.
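How SiteSucker itself decides which links to follow is not covered here. The sketch below only illustrates the kind of constraint that stops that roaming. It is a minimal Python example, assuming the rule "same host, and at or below the starting directory"; the function name follow and the example.com URLs are hypothetical.

```python
from typing import Optional
from urllib.parse import urldefrag, urljoin, urlparse


def follow(start_url: str, page_url: str, href: str) -> Optional[str]:
    """Return the absolute URL to fetch for `href`, or None to skip it.

    `href` is resolved against the page it was found on, and it is kept
    only if it uses http(s), stays on the start URL's host, and lies at
    or below the start URL's directory.
    """
    absolute, _fragment = urldefrag(urljoin(page_url, href))
    start, target = urlparse(start_url), urlparse(absolute)
    if target.scheme not in ("http", "https"):
        return None  # mailto:, javascript: and the like
    if target.netloc.lower() != start.netloc.lower():
        return None  # another site: do not roam
    start_dir = start.path[: start.path.rfind("/") + 1]  # "/diary/diary.html" -> "/diary/"
    if not target.path.startswith(start_dir):
        return None  # outside the starting directory
    return absolute
```

Starting from a hypothetical http://example.com/diary/diary.html, a link to 2008/jan.html is kept, while links to ../index.html or to www.apple.com are dropped, so the crawl cannot wander off to Apple.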

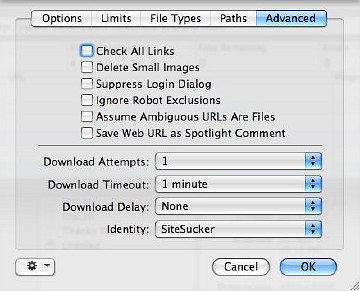

A Paths panel is for the careful fine-tuning that some sites will need, for example to include the images used by the files in my test directory. Just as useful is the section for paths to exclude. As if these choices were not advanced enough, there is an Advanced panel that allows a user to take some extra care in making site downloads. Of use here, for example, might be the Identity button, which allows the browser identity to be spoofed. So many sites in Thailand claim to be IE6-only, a restriction that is easy to get round with Firefox, and with Safari if its Debug menu is active. Choices here are Camino 1.0, Netscape 7.2, Safari 2.0 and IE 6, as well as SiteSucker itself and "None."
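SiteSucker's own path syntax and the exact identity strings it sends are not described here, so the following is only a minimal Python sketch of the two ideas. The first is include/exclude patterns matched against the URL path (shell-style wildcards via fnmatch, an assumption on my part). The second is "spoofing" the browser by setting the User-Agent header that IE6-only sites typically check. The function names and the IE 6 string are illustrative.

```python
import urllib.request
from fnmatch import fnmatchcase
from typing import Optional, Sequence
from urllib.parse import urlparse

# A typical IE 6 / Windows XP User-Agent string (illustrative only).
IE6_UA = "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)"


def path_allowed(url: str, include: Sequence[str], exclude: Sequence[str]) -> bool:
    """Apply hypothetical include/exclude path rules to a URL.

    Exclusions win; with no include patterns, anything not excluded is
    allowed. In these patterns "*" also matches "/", so "/diary/*" covers
    subdirectories too.
    """
    path = urlparse(url).path
    if any(fnmatchcase(path, pat) for pat in exclude):
        return False
    return not include or any(fnmatchcase(path, pat) for pat in include)


def make_request(url: str, user_agent: Optional[str] = None) -> urllib.request.Request:
    """Build a request that identifies itself as `user_agent`.

    With user_agent=None no header is set on the request itself, although
    urllib's opener adds its own default User-Agent when the request is sent.
    """
    headers = {"User-Agent": user_agent} if user_agent else {}
    return urllib.request.Request(url, headers=headers)
```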

As a test tool for a developer, a utility to assist those who still have restrictions on time or data downloads, or even an aid for a teacher who wants to display a complete website to a class, SiteSucker is a great little utility from a developer named Rick Cranisky, whose SiteSucker site has instructions, help, downloads and a link to PayPal for (worthy) donations. It is also considerably easier to use than curl or wget.


I have a sort of love-hate relationship with Unix. I grew up with the internet in Thailand when the first connection was a backbone system that connected to Melbourne in Australia several times a day via a dialup link from the Prince of Songkhla University in Hat Yai -- conveniently close to Songkhla where the submarine cable disappears into the sea.